Can AI Replace Ad Monetization Managers? The Good, The Bad and The Hallucinated

By Damjan Kačar, Ad Monetization Specialist, GameBiz Consulting

GameBiz Consulting put AI to the test to answer a bold question: can ChatGPT or any other model actually do the job of an ad monetization manager?

These days, it seems like AI is everywhere. From routine tasks like drafting emails and summarizing news to more sensitive matters, such as legal or medical advice, people are increasingly turning to AI chatbots for help.

Even in the niche world of mobile ad monetization in which I work, it’s becoming more common for clients and colleagues to bring up something they’ve “heard from ChatGPT” or another AI tool.

This naturally raised a question for me: just how capable are these chatbots? And more importantly, should I be concerned about my job being automated by AI?

To explore this, I created a set of 40 questions focused on ad monetization. Then I used these questions to evaluate how today’s top AI models stack up against my own expertise and experience.

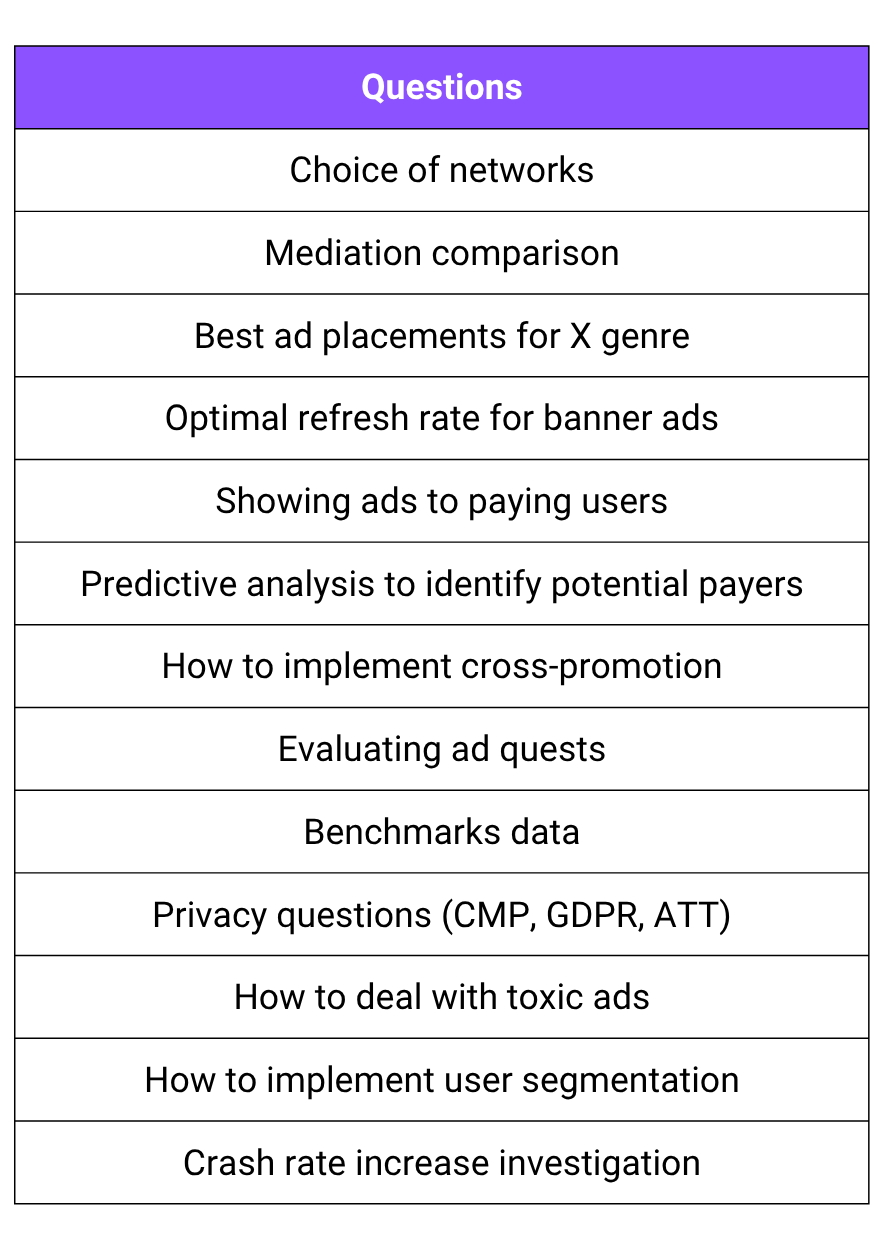

The questions cover a wide range of ad monetization topics:

- Core knowledge that any junior ad monetization specialist should have

- Frequently asked questions we encounter in client work

- Advanced-level insights I’d expect from experts in the field

- And a few highly challenging scenarios designed to truly test the limits of AI models

You’ll find a sample of these broader questions, more accurately described as topics, below. Each topic received a single, holistic grade. However, most topics required several questions to explore fully, so the number of questions actually asked was much higher than 40.

When working with AI, how you phrase your questions matters just as much as what you ask. This process is known as prompting, a nuanced skill that deserves its own detailed analysis (which we might explore in a future article!).

For now, what you need to know is this: learning how to prompt is essential if you want to get meaningful, high-quality responses from AI. The better your prompts are, the more useful the answers you're going to get.

So, what exactly did I test?

Initially, I planned to run these 40 ad monetization questions through any AI tool I could access. But it didn’t take long to realize that this wasn’t going to generate anything valuable. Free versions and outdated models simply aren’t capable enough to provide reliable insights, especially not in a field as specialized as this. I strongly suggest not relying on those for ad monetization advice.

Instead, I narrowed it down to the three most capable models currently available, based on industry benchmarks:

- ChatGPT (o3)

- Gemini (2.5 Pro)

- Claude (Opus 4)

Each model was tested with all 40 questions. I kept the opening prompts identical across all models, with follow-ups adapted based on the initial response, ensuring the comparisons remained as fair as possible.

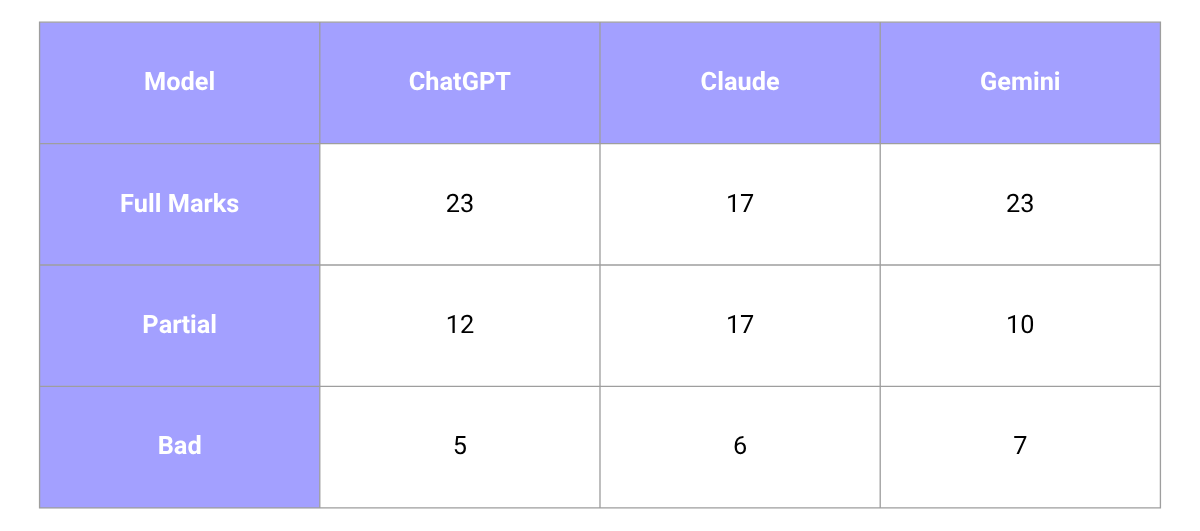

Here’s how they performed. The competition was close, with ChatGPT (o3) coming out on top.

This is how I evaluated the responses:

- Full Marks – A complete and accurate answer, on par with my own response, or only marginally improvable

- Partial – The response had some non-critical issues or didn’t answer the question in full

- Bad – The answer was outright incorrect or included significant flaws
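
A three-level rubric like this lends itself to a simple tally. The sketch below is only an illustration, not the scoring process actually used for this article: the grade labels mirror the rubric, while the `summarize` helper and the sample grades are hypothetical placeholders.

```python
from collections import Counter

# The three rubric levels described above.
GRADES = ("full", "partial", "bad")


def summarize(grades):
    """Return the share of each rubric level in a list of per-topic grades."""
    if not grades:
        raise ValueError("at least one grade is required")
    unknown = set(grades) - set(GRADES)
    if unknown:
        raise ValueError(f"unknown grade(s): {sorted(unknown)}")
    counts = Counter(grades)
    total = len(grades)
    # Counter returns 0 for levels that never occur, so every level is reported.
    return {level: counts[level] / total for level in GRADES}


# Hypothetical placeholder grades for one model, NOT the article's results.
sample = ["full", "full", "partial", "bad"]
print(summarize(sample))
```

Reporting shares rather than raw counts keeps models comparable even if one of them was graded on fewer topics.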

At first glance, the results seem promising. Over half of the responses earned top marks. But once the initial excitement fades, the limitations become more apparent. Even the most advanced models stumble far too often.

Think about it this way: would you want to rely on a colleague who gets things wrong nearly half the time?

What’s more concerning is the severity of the mistakes. Some weren’t just a little off; they were dangerously wrong. For instance, one model suggested refreshing banner ads every 30 to 120 seconds. Considering the standard is 5 to 15 seconds, implementing that advice could slash your ad revenue by 30% to 60%.

We’ll examine the most problematic errors later on. But first, let’s take a look at what the models got right.

The Good

One of AI’s biggest strengths lies in delivering well-structured overviews across a wide range of topics. Whether you're brushing up on a concept or diving into something unfamiliar, it provides a solid starting point. It’s especially useful when onboarding teammates who might lack some specialized knowledge.

When it comes to data analytics, the capabilities are great. AI can now handle and format data in a way that makes it easier to use and understand. It can also extract insights from the data, and while the results are not flawless, they save a lot of time and significantly raise the bar on what you can do on your own. Just make sure to verify everything twice, because hallucinations still happen.

AI also proved to be great for brainstorming and ideation. I got some fantastic answers when I asked about different ways to implement something. Whether it was for in-game design or for solving a tricky technical problem, the results were impressive.

Take Gemini’s response to a prompt on Ad Quests, for example. From a single question, it generated a rich, multi-page breakdown that covered:

- Practical game design and UX considerations

- Reward mechanics that align with the overall monetization balance

- An assessment of the upsides and possible risks

Even better, the suggestions were on theme, something developers often struggle with. Ads weren’t treated as a bolt-on, but as an integrated part of the game world.

And the puns were surprisingly clever. I’m usually not a fan, but the flavor text in that answer outperformed a lot of what I see in actual live implementations.

The Bad

A large share of the mistakes came down to poor-quality sources. It often feels like the models pull in anything vaguely relevant from the web, heavily favoring content that ranks high on Google. Unfortunately, high-ranking doesn’t always mean accurate. Many of these sources were outdated or just plain wrong. In fact, I came across multiple instances where information from 2021 was referenced as if it were still current. That is an eternity ago in ad monetization, where a single year can bring sweeping changes.

Another recurring issue is overreliance on pattern matching. In one case, I casually mentioned Brazil as a relevant GEO, just one of many, and not even in the top tier. Yet, a disproportionate number of follow-up suggestions were Brazil-centric. The models seem to latch on to certain details and over-index on them, even when they’re not the most important part of the context.

That leads to a broader takeaway: don’t overload AI with unnecessary background. Even when all the details seem relevant, too much context, especially if it includes competing priorities, can derail the response. The model can get confused fast, and the output loses coherence.

Then there’s the issue of unsupported suggestions. Several times, the models proposed strategies that might sound plausible on paper but have no basis in reality. They aren’t supported by data or any real-world use cases. And before you ask "Maybe the AI spotted something we didn’t?", the answer is unfortunately no. Every suggestion was poor or, at best, highly questionable.

Examples included suggesting offerwall in completely incompatible genres and recommending banner ads in horizontally oriented games.

The Hallucinated

Hallucinations, AI’s most persistent flaw. Anyone who’s spent time using these tools has likely run into them. Despite your best efforts, they still manage to slip through.

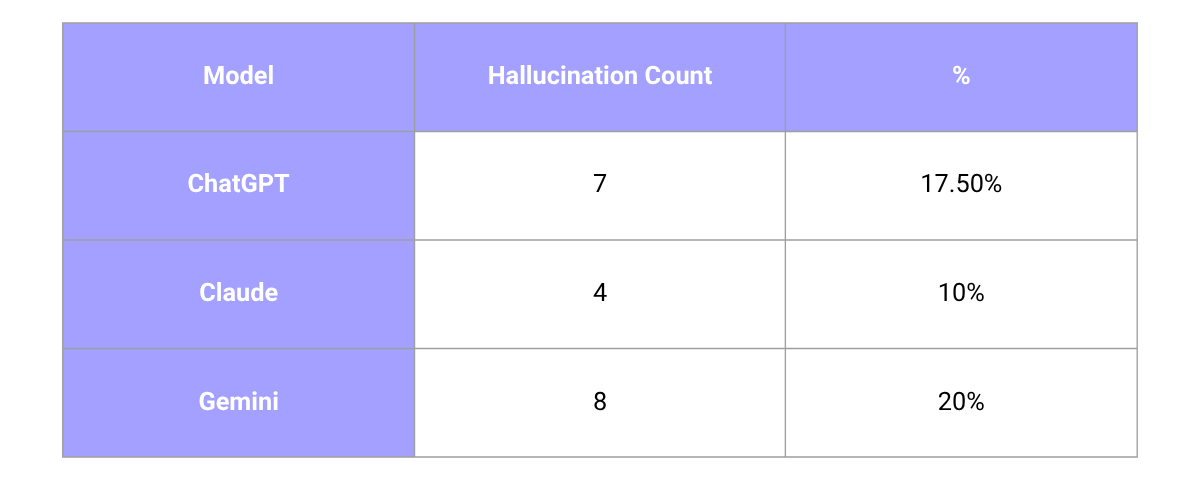

In this project, hallucinations were surprisingly frequent, showing up in 10% to 20% of responses depending on the model. To be fair, many were harmless: small inaccuracies that didn’t severely affect the overall quality. Only a few were truly problematic.

Across the board, the models consistently underperformed when asked to recommend the “top X” in a specific category. Some of the worst offenders included:

- Suggesting a revive mechanic for a game genre that doesn’t even include death or failure states

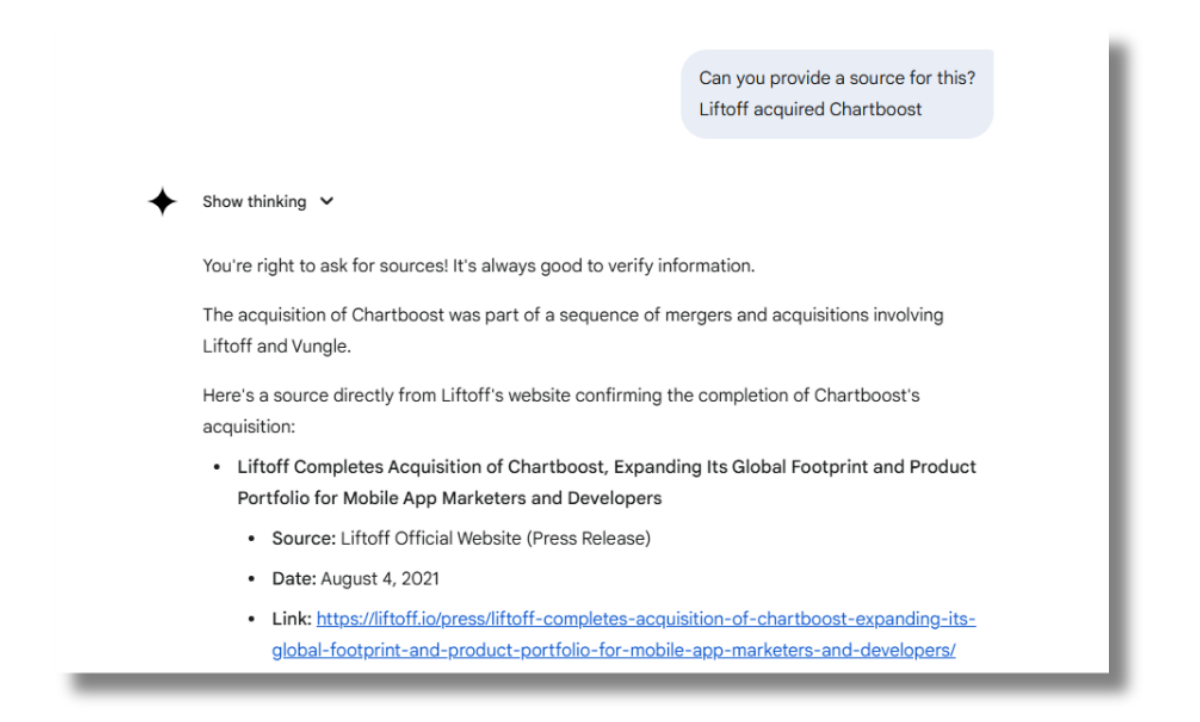

- Claiming Liftoff acquired Chartboost and then inventing an entirely fake news article to back it up when asked for a source

More subtle hallucinations tended to crop up when the questions were too broad. Breaking prompts into smaller, more focused parts helped reduce this.

Another recurring issue was cross-domain confusion: the models pulled in advice from adjacent areas like SEO, user acquisition, or web-based monetization, and then applied it incorrectly to mobile ad monetization scenarios.

And then there were answers that were just… made up. No logic, no data, just confidently wrong answers.

Am I Going to Be Replaced by an AI?

The results of this experiment were both encouraging and disappointing. In some cases, the AI delivered answers so well thought out that I would’ve struggled to improve on them. But those high points were often undermined by glaring errors, even on simple, fundamental questions.

This research also highlighted several other weaknesses:

To get meaningful output from AI, you need subject matter knowledge. If I hadn’t known how to steer the conversation, ask clarifying follow-ups, or spot when something was off, the results would have been far poorer.

There’s also a real skill to prompting. Understanding how these models work and being willing to iterate and refine your prompts makes a big difference. Asking “naturally” is rarely enough.

And then there’s the issue of hallucinations. Based on what I observed and what limited external data is available, even the best-case scenarios only bring hallucination-free responses to around 90%. Most setups don’t reach that number. This makes it difficult to build a repeatable, reliable process around AI.
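
To see why a rate of around 90% makes a repeatable process hard, consider a workflow that chains several AI responses. The sketch below is a back-of-the-envelope illustration under one loud assumption that is not a finding from the tests described here: each response is hallucination-free independently, with the same probability. The function name `chain_clean_probability` is hypothetical.

```python
def chain_clean_probability(per_response_rate: float, steps: int) -> float:
    """Probability that every one of `steps` responses is hallucination-free.

    ASSUMPTION: responses are independent and share the same clean rate.
    Real conversations may violate this, so treat the result as a rough guide.
    """
    if not 0.0 <= per_response_rate <= 1.0:
        raise ValueError("per_response_rate must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_response_rate ** steps


# 0.9 is the best-case clean rate cited in the paragraph above.
for steps in (1, 5, 10):
    print(steps, round(chain_clean_probability(0.9, steps), 3))
```

Under that assumption, a five-step workflow comes out fully clean only about 59% of the time, and a ten-step one about 35% of the time, which is why every step still needs a human check.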

The phrase “garbage in, garbage out” is still super relevant. If the model is learning from flawed, outdated, or misleading content, the outputs will reflect that, and currently, there’s no way to vet or filter the training data.

Worse yet, AI tends to act as a people pleaser. It agrees with you, reinforces your assumptions, and rarely pushes back. It will happily lead you down a completely wrong path.

So… will AI take over ad monetization?

Not yet. Despite its impressive improvements, AI still falls short of replacing a skilled human. My transition to a full-time beekeeper is postponed for now.

That said, the rate of progress is undeniably impressive, but also unnerving. AI isn’t ready to take over. But it's getting closer.

And that is quite scary.

This article was originally published in the GameBiz Consulting Ad Monetization Newsletter. You can subscribe to it for similar deep dives delivered every month at this link.

🎟️ Damjan Kačar will be delving into all things ad mon on day two of Gamesforum London - don't miss out, buy your tickets here.